Publications

You can also find my articles on my Google Scholar profile.

Miquel Oller, An Dang, Nima Fazeli

Preprint, 2025

Hydrosoft is a computationally efficient, path-dependent, and differentiable model for simulating and controlling soft, compliant robotic components in dexterous manipulation tasks.

Samanta Rodriguez, Yiming Dou, Miquel Oller, Andrew Owens, Nima Fazeli

9th Conference on Robot Learning (CoRL), 2025

We present a method for transferring manipulation policies between different tactile sensors by generating cross-sensor tactile signals. Using either a paired diffusion model (T2T) or an unpaired depth-based approach (T2D2), the method enables zero-shot policy transfer without retraining. We demonstrate it on a marble rolling task, where policies learned with one sensor are successfully applied to another.

Mark Van der Merwe, Miquel Oller, Dmitry Berenson, Nima Fazeli

IEEE Robotics and Automation Letters (RA-L), 2025

We present a hybrid learning and first-principles approach to model deformable tools dexterously manipulating rigid objects, capturing simultaneous motion, force transfer, contacts, and both intrinsic and extrinsic dynamics.

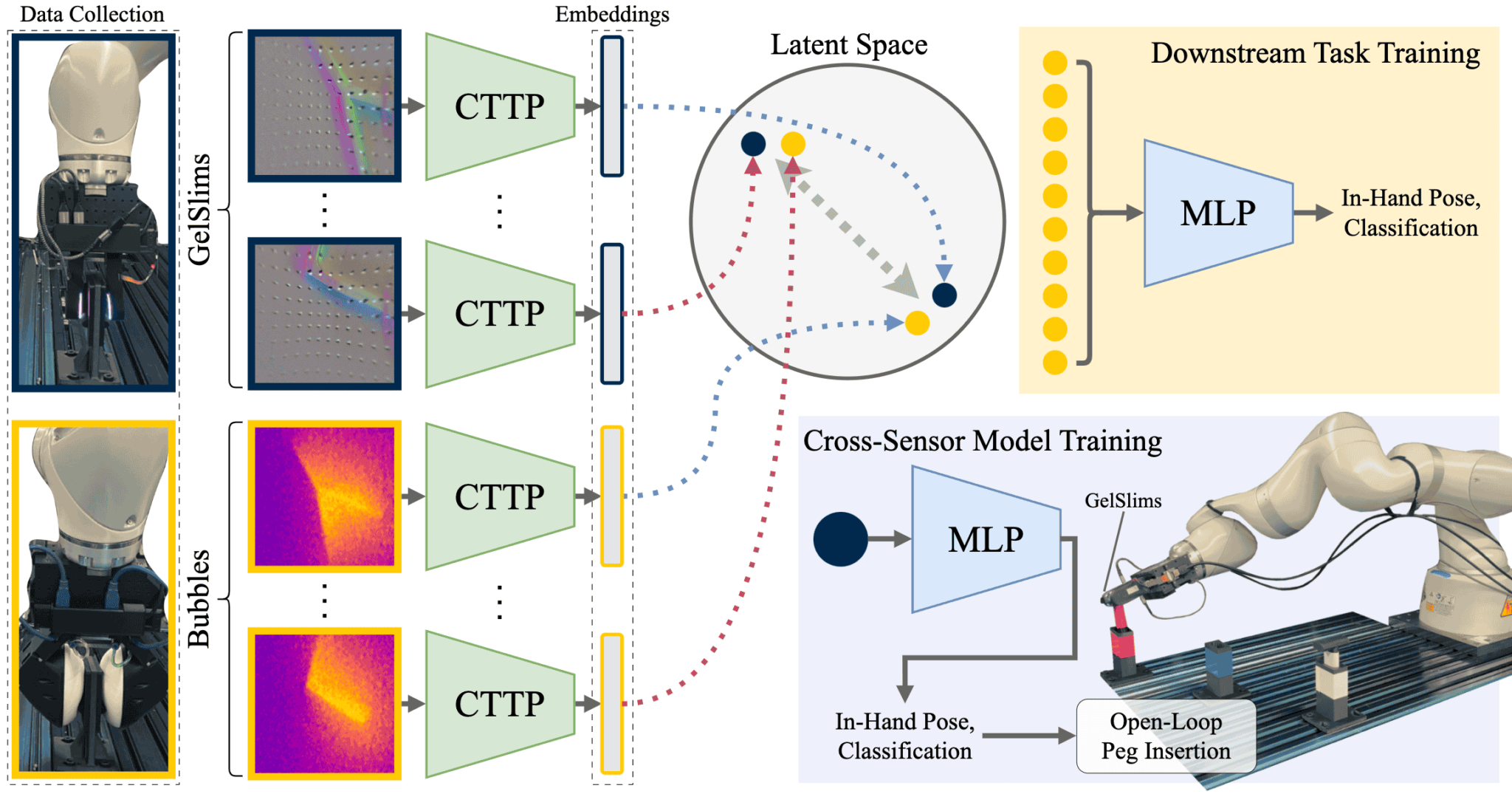

Samanta Rodriguez, Yiming Dou, William van den Bogert, Miquel Oller, Kevin So, Andrew Owens, Nima Fazeli

International Conference on Robotics and Automation (ICRA), 2025

We present a contrastive self-supervised learning method to unify tactile feedback across different sensors, using paired tactile data. By treating paired signals as positives and unpaired ones as negatives, our approach learns a sensor-agnostic latent representation, capturing shared information without relying on reconstruction or task-specific supervision.

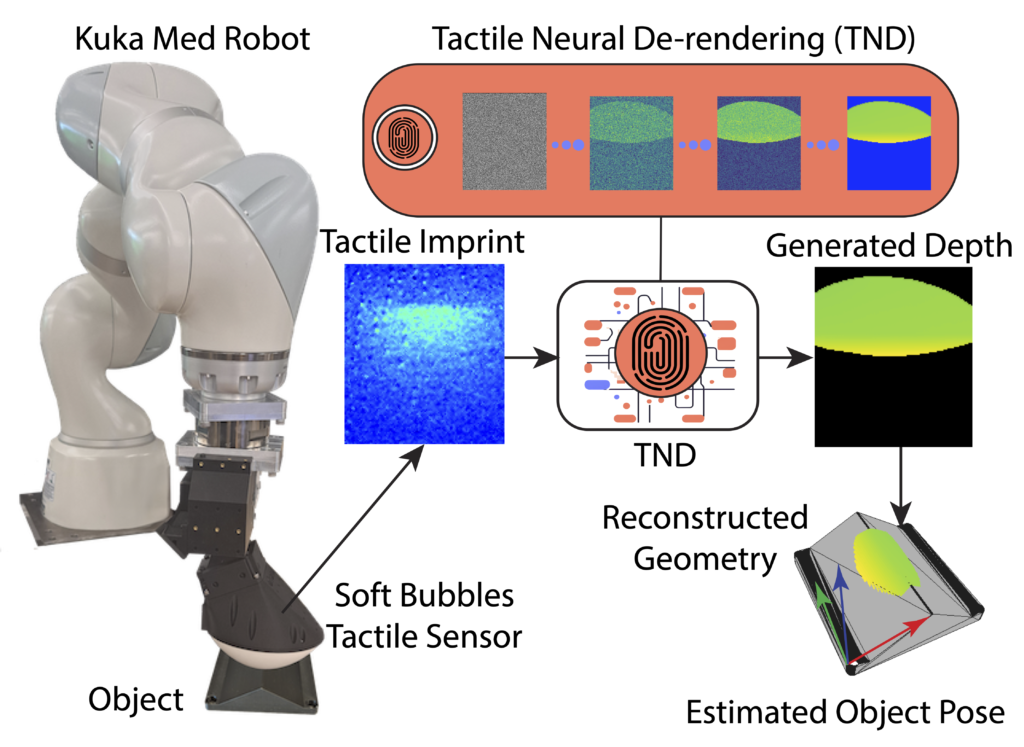

J.A. Eyzaguirre, Miquel Oller, Nima Fazeli

Preprint, 2024

We introduce Tactile Neural De-rendering, a novel approach that leverages a generative model to reconstruct a local 3D representation of an object based solely on its tactile signature.

Samanta Rodriguez, Yiming Dou, Miquel Oller, Andrew Owens, Nima Fazeli

Preprint, 2024

The diversity of touch sensor designs complicates general-purpose tactile processing. We address this by training a diffusion model for cross-modal prediction, translating tactile signals between GelSlim and Soft Bubble sensors. This enables sensor-specific methods to be applied across sensor types.

Youngsun Wi, Jayjun Lee, Miquel Oller, Nima Fazeli

8th Conference on Robot Learning (CoRL), 2024

We propose a Physics-Informed Neural Network (PINN) approach for solving inverse source problems in robotics, jointly identifying unknown source functions and system states from partial, noisy observations. Our method integrates diverse constraints, avoids complex discretizations, accommodates real measurement gradients, and is not limited by training data quality.

Miquel Oller, Dmitry Berenson, Nima Fazeli

Robotics: Science and Systems (RSS), 2024

We consider the problem of non-prehensile manipulation with highly compliant, high-resolution tactile sensors. Our approach accounts for contact mechanics and sensor dynamics to achieve desired object poses and transmitted forces, and is amenable to gradient-based optimization.

Miquel Oller, Dmitry Berenson, Nima Fazeli

7th Conference on Robot Learning (CoRL), 2023

Our paper introduces TactileVAD, a decoder-only control method that resolves tactile geometric aliasing, improving performance and reliability in touch-based manipulation across various tactile sensors.

Miquel Oller, Mireia Planas, Dmitry Berenson, Nima Fazeli

6th Conference on Robot Learning (CoRL), 2022

Our method learns the deformation dynamics of soft tactile sensor membranes to control a grasped object’s pose and the force transmitted to the environment during contact-rich manipulation tasks such as drawing and in-hand pivoting.

Nima Fazeli, Miquel Oller, Jiajun Wu, Zheng Wu, J. B. Tenenbaum, Alberto Rodriguez

Science Robotics, 2019

This work introduces a methodology for robots to learn complex manipulation skills, such as playing Jenga, by emulating hierarchical reasoning and multisensory fusion through a temporal hierarchical Bayesian model. By leveraging learned tactile and visual representations, the robot adapts its actions and strategies in a manner similar to human gameplay.