Cross-Sensor Touch Generation

Samanta Rodriguez, Yiming Dou, Miquel Oller, Andrew Owens, Nima Fazeli

Published in the 9th Conference on Robot Learning (CoRL), 2025

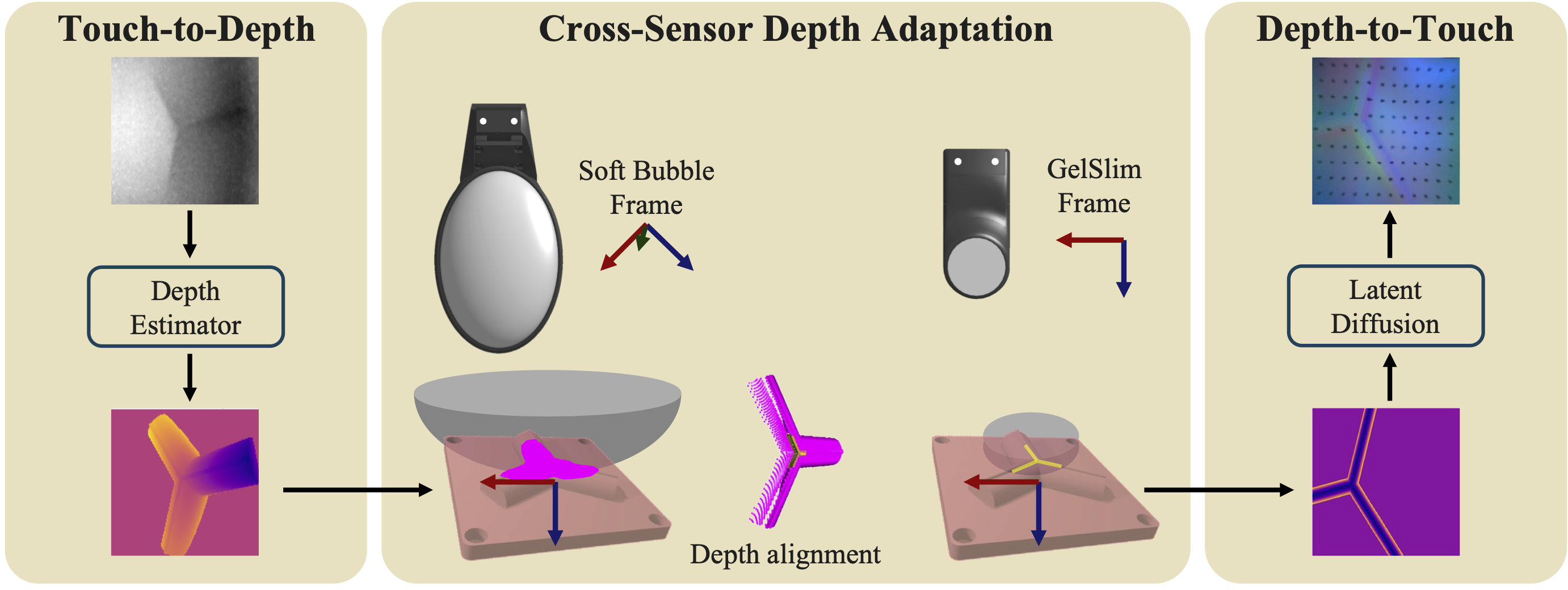

The constant evolution of tactile sensors makes it challenging to transfer policies from one sensor to another. We aim to enable tactile policy reuse by generating tactile signals across sensors. This is achieved via two approaches: (1) Touch2Touch (T2T), a one-stage diffusion-based model trained on paired sensor data to directly translate tactile images between sensors, and (2) Touch-to-Depth-to-Touch (T2D2), a two-stage unpaired method that uses a shared depth representation to bridge the gap between sensors. These approaches allow existing models, whether learned via Reinforcement Learning (RL) or Imitation Learning (IL), to be reused with new sensors without retraining.
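As a rough illustration of the two-stage idea behind T2D2, the sketch below composes a source-sensor touch-to-depth stage with a target-sensor depth-to-touch stage. Everything in it is an assumption made for illustration: the function names are hypothetical, and the per-pixel arithmetic is a toy stand-in for the learned models, not the method from the paper.

```python
from typing import Callable, List

Image = List[List[float]]  # rows of pixel values (toy representation)


def make_two_stage_translator(
    touch_to_depth: Callable[[Image], Image],
    depth_to_touch: Callable[[Image], Image],
) -> Callable[[Image], Image]:
    """Compose two independently built stages through a shared depth map."""
    def translate(source_touch: Image) -> Image:
        depth = touch_to_depth(source_touch)  # stage 1: source sensor -> depth
        return depth_to_touch(depth)          # stage 2: depth -> target sensor
    return translate


# Toy stand-ins (hypothetical): real stages would be learned models.
def sensor_a_touch_to_depth(image: Image) -> Image:
    return [[pixel / 2.0 for pixel in row] for row in image]


def sensor_b_depth_to_touch(depth: Image) -> Image:
    return [[value + 10.0 for value in row] for row in depth]


a_to_b = make_two_stage_translator(sensor_a_touch_to_depth, sensor_b_depth_to_touch)
translated = a_to_b([[4.0, 8.0]])
```

Because the two stages only meet at the depth map, each one can in principle be built from a single sensor's data, which is what removes the need for paired recordings in this kind of design.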

The effectiveness of the proposed framework is demonstrated on a marble rolling task, where policies trained with one sensor are successfully executed with another through the generated tactile signals. This validates that the generative translation accurately preserves the contact information critical for manipulation. The study also evaluates performance using both visual metrics (PSNR, SSIM, FID) and functional outcomes (e.g., object pose estimation), showing that the method enables zero-shot policy transfer across heterogeneous tactile hardware in real-world tasks such as cup stacking and insertion.
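Of the visual metrics listed above, PSNR is the simplest to state: it is 10·log10(MAX² / MSE) between a reference image and a generated one. The sketch below is a minimal pure-Python version, assuming 8-bit grayscale images stored as lists of rows; the function name and data layout are illustrative choices, not the paper's evaluation code.

```python
import math


def psnr(reference, generated, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two same-sized images.

    Each image is a list of rows of pixel intensities.
    """
    ref_pixels = [p for row in reference for p in row]
    gen_pixels = [p for row in generated for p in row]
    if not ref_pixels or len(ref_pixels) != len(gen_pixels):
        raise ValueError("images must be non-empty and the same size")
    # Mean squared error over all pixels.
    mse = sum((r - g) ** 2 for r, g in zip(ref_pixels, gen_pixels)) / len(ref_pixels)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


score = psnr([[10, 20]], [[11, 21]])  # every pixel off by one, so MSE = 1
```

Higher values mean the generated image is closer to the reference pixel by pixel, which is why a metric like this is typically paired with functional measures such as pose estimation error.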

Project website: Cross-Sensor Touch Generation